How do I control AI agent memory when answering my questions?

EDSL provides a variety of features for adding context of prior questions to new questions, piping answers into other questions, and asking multiple questions at once in a structured way.

A popular area of exploration with LLM-based surveys and experiments is simulating memory—i.e., giving AI agents information about prior responses when presenting new questions. This may be desirable for a variety of reasons, such as wanting to approximate a human respondent’s experience answering a survey, or ensuring that a model’s responses are consistent and coherent across survey questions.

There are several ways to explore this in EDSL, our open-source Python package for simulating surveys and experiments with AI agents and large language models.

How to explore this in EDSL

EDSL has built-in methods for adding context of questions and answers to other questions within a survey or experiment, giving you precise control over the information that is included in each prompt. These include:

Question types that prompt a model to return responses to multiple questions at once in a dictionary or list.

Methods for piping specific components of questions into other questions—e.g., to insert just the answer to a question in a follow-on question.

Methods for adding the full context of one or more prior questions and answers to the presentation of later questions.

A one-shot multi-part question might work

A simple-sounding way to give an agent full context of a survey is to include all the questions at once in a single prompt, together with instructions on how to answer them. With longer sets of detailed questions, however, this can lead to incomplete and inconsistent results. Even if the model’s context window has not been exceeded, the model may be distracted by the amount of content and fail to follow every one of the many instructions precisely. If multiple agents are answering the survey, their responses may also be formatted inconsistently and require post-survey data cleaning.

Use a structured question

A better way to present multiple questions at once is to use a structured prompt: one that presents the questions so that, when administered to many different agents, the results come back in a consistent format that is easy to analyze.

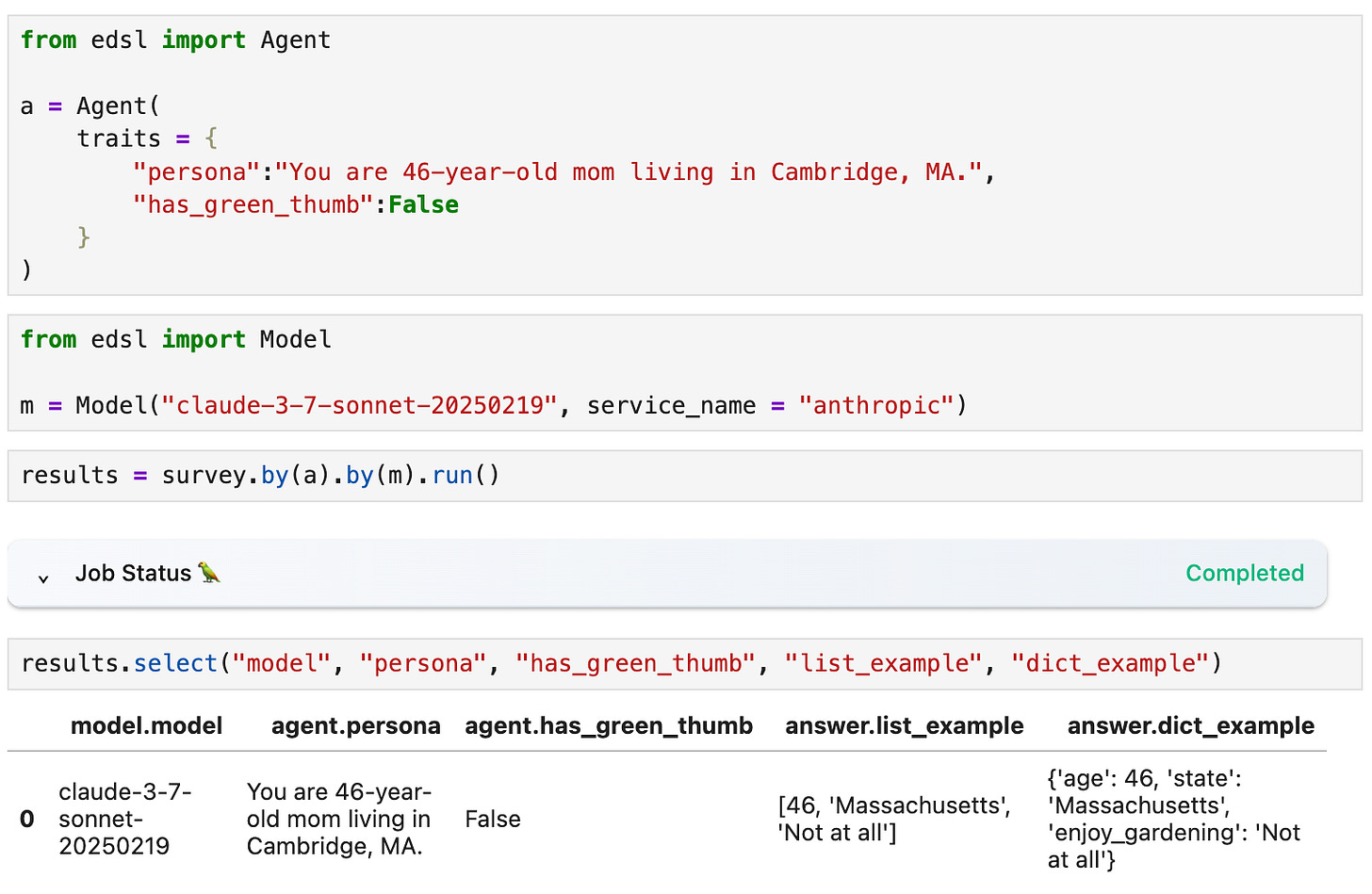

In EDSL, the question type that you choose—free text, multiple choice, numerical, etc.—determines the format of the dataset of results that is generated when a survey is sent to a model. If you want to ask multiple questions at once, a convenient question type to use may be QuestionDict or QuestionList. For example, here we present the same set of questions in both formats:

When we administer the questions to an agent and model, we get the multiple responses back in the specified formats, ready for export and analysis:

We can inspect and export the results at Coop too:

Code to reproduce this example is also available in this notebook at Coop.
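To make the benefit of a structured format concrete, here is a minimal plain-Python sketch of the idea behind dictionary-style and list-style questions. It does not use EDSL or call a model: the helper names, the answer keys, and the hard-coded replies are all hypothetical stand-ins, and EDSL's own QuestionDict and QuestionList handle prompting and validation in their own way.

```python
import json

# Hypothetical answer keys, chosen only for illustration.
ANSWER_KEYS = ["favorite_color", "favorite_number", "reason"]


def build_dict_prompt(question_text, keys):
    """Ask for several answers at once, returned as one JSON object."""
    key_list = ", ".join(f'"{k}"' for k in keys)
    return (
        f"{question_text}\n"
        f"Return only a JSON object with exactly these keys: {key_list}."
    )


def parse_dict_response(raw, keys):
    """Parse a reply and check that it has exactly the expected keys."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in keys if k not in data]
    extra = [k for k in data if k not in keys]
    if missing or extra:
        raise ValueError(f"missing={missing}, extra={extra}")
    # Return the values in a fixed key order so every agent's row lines up.
    return {k: data[k] for k in keys}


def parse_list_response(raw, expected_length):
    """Parse a reply and check that it is a list of the expected length."""
    data = json.loads(raw)
    if not isinstance(data, list) or len(data) != expected_length:
        raise ValueError(f"expected a JSON list of {expected_length} items")
    return data


prompt = build_dict_prompt("Please answer the following about yourself.", ANSWER_KEYS)

# Stand-in replies; a real run would receive these strings from a model.
dict_reply = '{"reason": "It is calming", "favorite_color": "blue", "favorite_number": 7}'
list_reply = '["blue", 7, "It is calming"]'

dict_row = parse_dict_response(dict_reply, ANSWER_KEYS)
list_row = parse_list_response(list_reply, len(ANSWER_KEYS))
```

Because every reply is checked against the same keys (or the same length), rows from different agents line up without post-survey cleaning.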

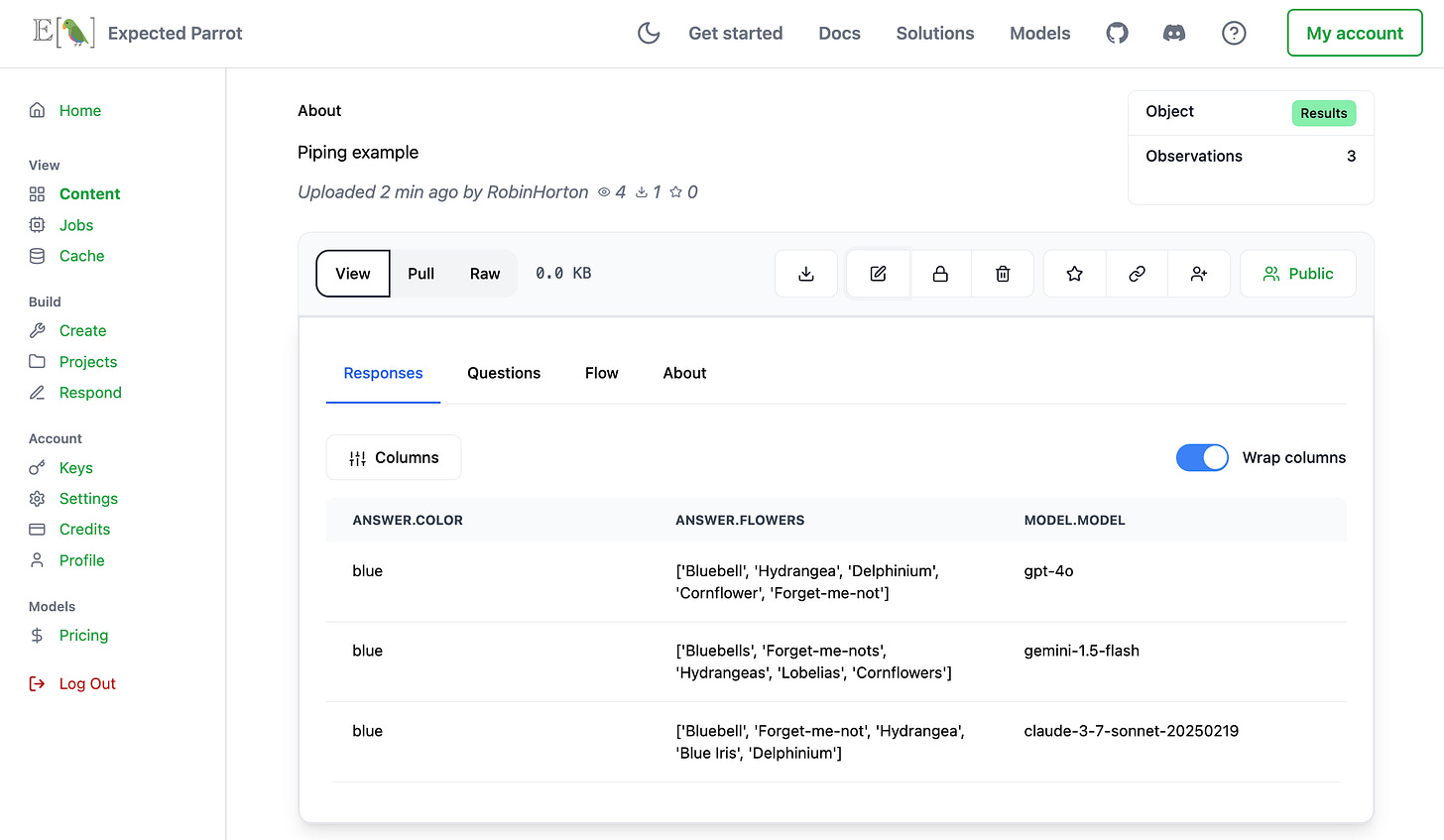

Piping answers into other questions

Another tool that allows you to precisely control the information that is included in each prompt while keeping prompts efficient is piping. Instead of piling entire questions together, you can specify exactly which parts of prior questions and answers you want to include in later questions. For example, here we just insert the response to q1 into the context of q2, allowing us to keep the context of q2 from becoming unnecessarily long:

We can also access these results at Coop:

Code to reproduce this example is also available in this notebook at Coop.
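The mechanics of piping can be sketched without any library. The example below is an illustration only, not EDSL's implementation: the `render` helper and the sample answer are hypothetical, and the `{{ q1.answer }}` placeholder style is an assumption about the syntax, so check the EDSL documentation for the exact form.

```python
import re

# Matches placeholders of the form {{ name.answer }} (assumed syntax).
PIPE_PATTERN = re.compile(r"\{\{\s*(\w+)\.answer\s*\}\}")


def render(question_text, answers):
    """Replace each {{ name.answer }} placeholder with the stored answer."""

    def substitute(match):
        name = match.group(1)
        if name not in answers:
            raise KeyError(f"no answer recorded yet for {name!r}")
        return str(answers[name])

    return PIPE_PATTERN.sub(substitute, question_text)


# Stand-in for the answer a model gave to q1.
answers = {"q1": "green"}

q2_text = "Why is {{ q1.answer }} your favorite color?"
q2_prompt = render(q2_text, answers)
```

Only the answer to q1 reaches the q2 prompt; the text of q1 and any instructions that came with it are left out, which is what keeps the later prompt short.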

Adding context of specific prior questions

Adding the context of only specific prior questions can be especially useful when your survey has independent sub-sections of questions that only require the context of other questions in the same sub-section, or when you want to explore the impact of particular context. For example, here we create a survey of three questions and add a survey rule to present the context of q1 in q2, but not in q3. We inspect the prompts to verify what will be sent to the model (answers are ‘None’ because the survey has not been run yet):
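As a rough, library-independent sketch of what such a rule does, the snippet below builds one prompt per question and attaches prior questions and answers only where a rule asks for them. Everything here is hypothetical (the question texts, the `MEMORY_RULES` mapping, the `build_prompt` helper, and the wording of the context); EDSL's own rule methods and prompt wording may differ.

```python
# Hypothetical three-question survey.
QUESTIONS = {
    "q1": "What is your favorite color?",
    "q2": "What is your favorite number?",
    "q3": "What is your favorite season?",
}

# Focal question -> prior questions whose Q&A should be shown with it.
MEMORY_RULES = {"q2": ["q1"]}


def build_prompt(name, questions, answers, memory_rules):
    """Build the prompt for one question, adding only the context its rule names."""
    lines = []
    priors = memory_rules.get(name, [])
    if priors:
        lines.append("Before the question you are now answering, you already answered:")
        for prior in priors:
            lines.append(f"  Question: {questions[prior]}")
            # answers.get returns None for questions that have not been answered yet.
            lines.append(f"  Answer: {answers.get(prior)}")
    lines.append(questions[name])
    return "\n".join(lines)


answers = {}  # nothing has been answered yet, so remembered answers show as None

prompts = {
    name: build_prompt(name, QUESTIONS, answers, MEMORY_RULES)
    for name in QUESTIONS
}

for name, prompt in prompts.items():
    print(f"--- {name} ---\n{prompt}\n")
```

In this sketch the q2 prompt carries the q1 question with a placeholder answer, while q1 and q3 are sent on their own.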

Adding full context of prior questions and answers

We can also add a survey rule to present the full context of all prior questions and answers at each subsequent question. For example, here we revise the above survey so that every question is presented together with all of the questions and answers that came before it:

Note, however, that this method can quickly produce very long question prompts as the set of questions grows.
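A small plain-Python sketch shows how quickly this adds up. It is only an illustration under stated assumptions: the placeholder questions and answers and the `full_memory_prompts` helper are hypothetical, and the snippet measures character counts rather than the tokens a real model would count.

```python
def full_memory_prompts(questions, answers):
    """Build one prompt per question, each carrying every earlier Q&A pair."""
    prompts = []
    history = []
    for name, text in questions:
        prompts.append("\n".join(history + [f"Question: {text}"]))
        # After a question is asked, its Q&A joins the history for later prompts.
        history.append(f"Question: {text}")
        history.append(f"Answer: {answers[name]}")
    return prompts


# Placeholder survey of five questions with identical stand-in answers.
questions = [(f"q{i}", f"Placeholder question number {i}?") for i in range(1, 6)]
answers = {name: "A placeholder answer." for name, _ in questions}

prompts = full_memory_prompts(questions, answers)
lengths = [len(p) for p in prompts]

for (name, _), length in zip(questions, lengths):
    print(f"{name}: {length} characters")
print(f"total sent across the survey: {sum(lengths)} characters")
```

Each prompt is longer than the one before it, so the total amount of text sent grows roughly with the square of the number of questions. Targeted context or piping keeps each prompt closer to a fixed size.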

Modifying prompts and instructions

In an upcoming post I’ll discuss and demonstrate built-in methods for modifying question type prompts and default instructions. These methods can also be useful in exploring and experimenting with agent memory!