Why AI social simulations will be transformative

The Simulmatics Corporation, founded in 1959, aimed to predict the future using simulations (it's right there in the company name). Those simulations could be run on newly available digital computers, informed by the micro-data that was, by then, routinely collected and digitized. Applications were wide and spanned politics, government and industry: who would turn out to vote, how a message would land in a counter-insurgency campaign, whether readers would find the plot of a novel compelling, and so on. And because you could fiddle with the simulation, you could estimate the effects of different choices: not just how this message would affect counter-insurgency efforts, but what message would work best (at least in simulation world).

The appeal of having a simulated model of the world is clear. Unlike in the real world, in a simulated world we get to see both the road taken and the road not taken. We can let time run forward at any speed we want, stop it, wind it back, change something and see what happens because of our change. We can try 1,000 different messages on one simulated person and they will never tire or complain; we can modify a hypothetical product in a hundred different ways (a product that in real life takes 6 months and costs $6M to modify) and get customer reactions. We can play out a jury trial with a variety of jury compositions and arguments. Even when fully deterministic, this process of simulation often reveals insights: anyone who has played SimCity or Civilization has surely experienced this whole-is-greater-than-the-sum-of-its-parts phenomenon.

But Simulmatics, the company, was a failure. Jill Lepore's book on their history, If Then: How the Simulmatics Corporation Invented the Future, has a title that's a lie, or really a metaphor. Simulmatics didn't invent the future. What she meant by "inventing the future" is that Simulmatics presaged our current focus on collecting data and building models to make predictions. Collecting enormous amounts of data and using it to make predictions is our current-day practice. Yet our analytics are often a pale imitation of what a simulation could offer.

Scintillating as it may be, a table of regression coefficients does not allow us to do all the open-ended knob-turning and exploration we can with simulations. Instead, when doing even serious analytic work, we have to make unpersuasive, often shaky arguments about how data from one domain will apply to some other domain, or hope that how people respond in a pencil-whipped survey will tell us how they would respond in real life. Or hope that the people we can cajole into taking an online survey to win a free iPad are like the CIOs buying an ERP system. Perhaps the closest we come to this "two roads at once" feature of a good simulation is the A/B test. That we do all this and put up with all this crap anyway speaks to how right Simulmatics was about the demand. We want to know the future now, or really, we want to know the possible futures so we can pick the one that suits us.

Simulations are like maps; most analytics are trip reports

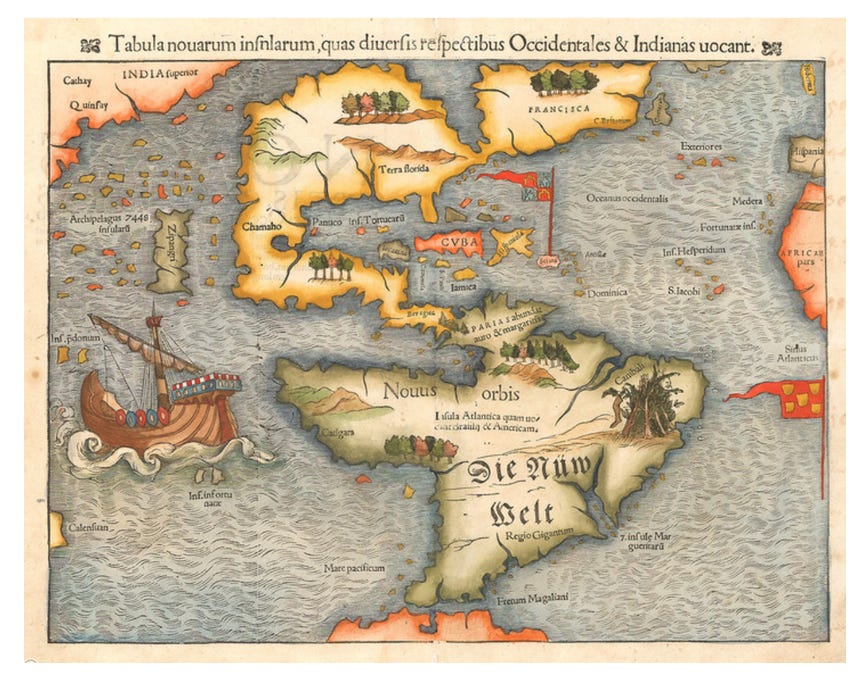

To make an analogy, a simulation is like a map. You can trace your finger along any path you like and get some sense of what that path would be like if you took it for real: steep or flat; wooded or grassland; will I encounter a river? A mountain? Is there a pass through the range? The map will, of course, elide details. It will not tell you what kinds of flowers will be in the meadow, never mind whether they are in bloom when you hit the meadow. But you can be reasonably confident that the meadow is there, about where it is supposed to be. As such, you can use it to make plans. A map is a prediction technology and thus a planning technology. Of course, maps can be bad and maybe the meadow is not there. "The map is not the terrain" is a cliche but a cliche for a reason: it's true and important. This first map of the Americas basically gets it right but it has…some issues:

But as bad as this map is, it would actually be remarkably useful for planning, say, a voyage to California. Far better than nothing and it would lead you to good decisions; if you wanted to skip Tierra del Fuego and cross overland, better do it near Panama than Brazil. And even in 1505, I could simulate my journey (trace my finger on the map) at a speed faster than any human has traveled, and for free and without a ship. Neat trick.

Most analytics data is "Alice walked this particular path 3 months ago and here is her report on what she saw"; if our instrumentation is really top-notch, maybe we have a full video of this journey. This is…something. But we know precious little about what even a slight deviation from that path might mean, and so generalizing Alice's experience to other paths we might take is a big leap. Maybe her report is "I walked across a vast desert" and in that case, we might implicitly make a map based on her report and use that for our planning. If we can do it, an A/B test is much better: we can send two scouting parties, with Lewis taking the northern route (A) and Clark the southern route (B). This is, of course, expensive and gives us only two routes, but at least we can make comparisons.

The main solution to this generalization problem (explicit in the social sciences and rarely made explicit in business) is theory-building. We hope that with some general social science theories about how humans behave, we can make predictions in new settings. Practically-minded businesses never describe themselves as theory-building (too pretentious and too academic) but that is precisely what they are doing: creating theories about the things they care about that they hope will generalize. What is "know your customer" if not "build an implicit model of your customer so you can predict what they will do in new situations"?

Of course, social science theories face a tension between usefulness and parsimony, and so we end up with theories that are not really up to the task of prediction, at least compared to the natural sciences. There are a lot of reasons for this; one is simply that it's hard to incorporate all the factors that matter. But perhaps the most fundamental is that people are complex. They are nuanced, strategic thinkers. They reason about how others will react, which affects how they will react, which in turn affects the whole system. They think about the future and the past. They have memories and emotions. They can make plans and enlist others, using their mental model of others to help them coordinate. It's all great stuff but it makes predicting their aggregate behavior hard. What you need, for many applications, is a model of humans in your environment that is nearly as complex and subtle as actual humans.

Back to Simulmatics: It was supply, not demand

Simulmatics failed not because there was no demand for what they proposed selling; they failed because they were about 65 years too early, technology-wise. They were founded in 1959, but what they wanted to do has only become credible in 2025. To make open-ended social simulations that actually work well and are very general, you need a reasonable simulacrum of actual individual human behavior that can be plugged in: how we think, how we reason, how we respond emotionally and rationally to stimuli. And we need these simulated humans to themselves generate stimuli that other simulated humans can respond to, and so on. This is just now possible with LLMs. They are, of course, not actual humans, but they are probably close enough for useful map-building. In a way, plugging in a model of humans is what economics already does, with great success: we plug in a narrow model of humans (one that can do calculus when making decisions) and then "simulate" not numerically but by solving the equations implied by our set-up.
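To make the "simulated humans generating stimuli for other simulated humans" idea concrete, here is a minimal sketch of such a loop. Everything in it is an assumption for illustration: `SimAgent`, `run_simulation` and `stub_respond` are hypothetical names, and `stub_respond` is a deterministic placeholder standing in for whatever LLM call a real simulation would make.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical signature for "ask a model what this persona says next":
# it receives the persona and the shared history, and returns an utterance.
RespondFn = Callable[[str, List[str]], str]


def stub_respond(persona: str, history: List[str]) -> str:
    """Deterministic placeholder; a real simulation would query an LLM here."""
    return f"[{persona}] reacting to: {history[-1]}"


@dataclass
class SimAgent:
    persona: str
    respond: RespondFn = stub_respond

    def act(self, history: List[str]) -> str:
        return self.respond(self.persona, history)


def run_simulation(agents: List[SimAgent], opening: str, rounds: int) -> List[str]:
    """Each agent's output is appended to the shared history, so it becomes
    the stimulus the next agent responds to."""
    history = [opening]
    for _ in range(rounds):
        for agent in agents:
            history.append(agent.act(history))
    return history


transcript = run_simulation(
    [SimAgent("skeptical juror"), SimAgent("persuadable juror")],
    opening="The defense rests.",
    rounds=2,
)
for line in transcript:
    print(line)
```

Swapping `stub_respond` for a function that calls a language model is the only change needed to turn the toy into an actual (if crude) social simulation; the loop itself stays the same.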

What we're doing: Tools for explorers, not making maps

Expected Parrot is not Simulmatics, in several important ways. Perhaps most fundamentally, Expected Parrot is not selling answers—we are making the tools that will let others find answers for the questions they care about. By creating open-source tools and a platform for sharing work, people can collectively explore what is possible with this technology, building on the work of others—which includes critiquing the work of others ("That's not where California is!").

Even with the potential for building these kinds of simulations, there is no guarantee that any particular simulation will be good or accurate; it depends greatly on how the simulations are designed, what data agents have, how they interact, and so on. And there are surely questions for which this approach does not work. But this "tools, not answers" stance is completely standard for powerful tools: Microsoft will not promise you that the spreadsheet you make is good or will give you the correct answer. Their commitment is to give you Excel, and that you can trust Excel to do what Excel claims to do. It is then on you to use it appropriately.

Our vision is that our tools and services are so easy to use—but also so powerful—that the first question before any decision is "How can we simulate this?" and the answer is usually very clear and not much work: it is trivial to build agent pools, design studies, launch simulations, analyze results and plan follow-on experiments. And, critically, this is all done while it is always made clear what the gap is between the simulation and what we know about the real world, as best we can tell.
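As a rough picture of that workflow (build an agent pool, design a study, launch it, analyze results), here is a toy sketch. It is not our tooling or any real API: the agent traits, the conditions, and the scoring rule in `simulated_rating` are all made up for illustration, and in practice the rating would come from a model-backed agent rather than a formula.

```python
from statistics import mean

# Hypothetical agent pool: each agent is just a dict of traits.
agent_pool = [
    {"name": "frugal shopper", "price_sensitivity": 3},
    {"name": "average shopper", "price_sensitivity": 2},
    {"name": "indifferent shopper", "price_sensitivity": 1},
]

# Hypothetical study design: the alternatives we want to compare.
conditions = [
    {"label": "current packaging", "appeal": 6, "price": 2},
    {"label": "premium packaging", "appeal": 9, "price": 3},
    {"label": "budget packaging", "appeal": 4, "price": 1},
]


def simulated_rating(agent, condition):
    """Made-up scoring rule standing in for a simulated agent's answer."""
    return condition["appeal"] - agent["price_sensitivity"] * condition["price"]


def run_study(agents, conds):
    """Launch: every agent sees every condition, so we observe all the roads."""
    return [
        {"agent": a["name"], "condition": c["label"], "rating": simulated_rating(a, c)}
        for a in agents
        for c in conds
    ]


def summarize(rows):
    """Analyze: average rating per condition."""
    labels = {row["condition"] for row in rows}
    return {
        label: mean(r["rating"] for r in rows if r["condition"] == label)
        for label in labels
    }


results = run_study(agent_pool, conditions)
summary = summarize(results)
best = max(summary, key=summary.get)
print(summary, "->", best)
```

Note that every agent responds to every condition, which is exactly what a real-world A/B test cannot give you; the open question for any real study is how far the simulated ratings sit from what actual customers would say.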

As a team, we are focused on very general tools because we believe that there is enough commonality across all would-be simulations that the tools can be "horizontal" without losing their power: the same tools that would allow an education researcher to explore how different lesson plans affect learning could also be used by a product designer coming up with a new kind of yogurt packaging. We don't need "desert mapping tools" and "grasslands mapping tools" per se; a transit is a transit no matter what we use it for. While the domains are radically different, the fundamental object of inquiry is no different: what will humans do? We think there can and will be a kind of common core. Rather than homogenize, common tools will facilitate cross-fertilization, mixed methods and boundary-spanning work.

Future

As AI advances, we expect that researchers will work at higher levels of abstraction. Now we design research instruments; tomorrow we'll propose research questions and the AI will propose surveys; next week the AI will be suggesting what research questions we should even be asking and doing all the associated research. Our tools will automatically construct the right agents, run the right simulations, do the right analyses and present the key results, perhaps all within seconds. And as our knowledge of how to combine human and AI answers improves (an active area of research), we'll get precise, automatically generated predictive models that make optimal use of both sources of information. One could think of it as an infinitely scrolling map (flawed, like all maps) that gets built on the fly so quickly that it seems like the full map already exists. It's going to be a wild world and we're excited about helping to build it.