Validate your LLM answers with real respondents

Here's a quick example of methods for generating a web-based version of your LLM survey and analyzing human and LLM responses together at your workspace.

Sometimes it’s helpful to run your LLM-based survey with some real respondents. You can do this in EDSL using built-in methods for auto-generating a web-based version of your LLM survey and comparing human and LLM responses. Code for the quick example below is available in this downloadable notebook at Coop, our platform for creating and sharing AI research.

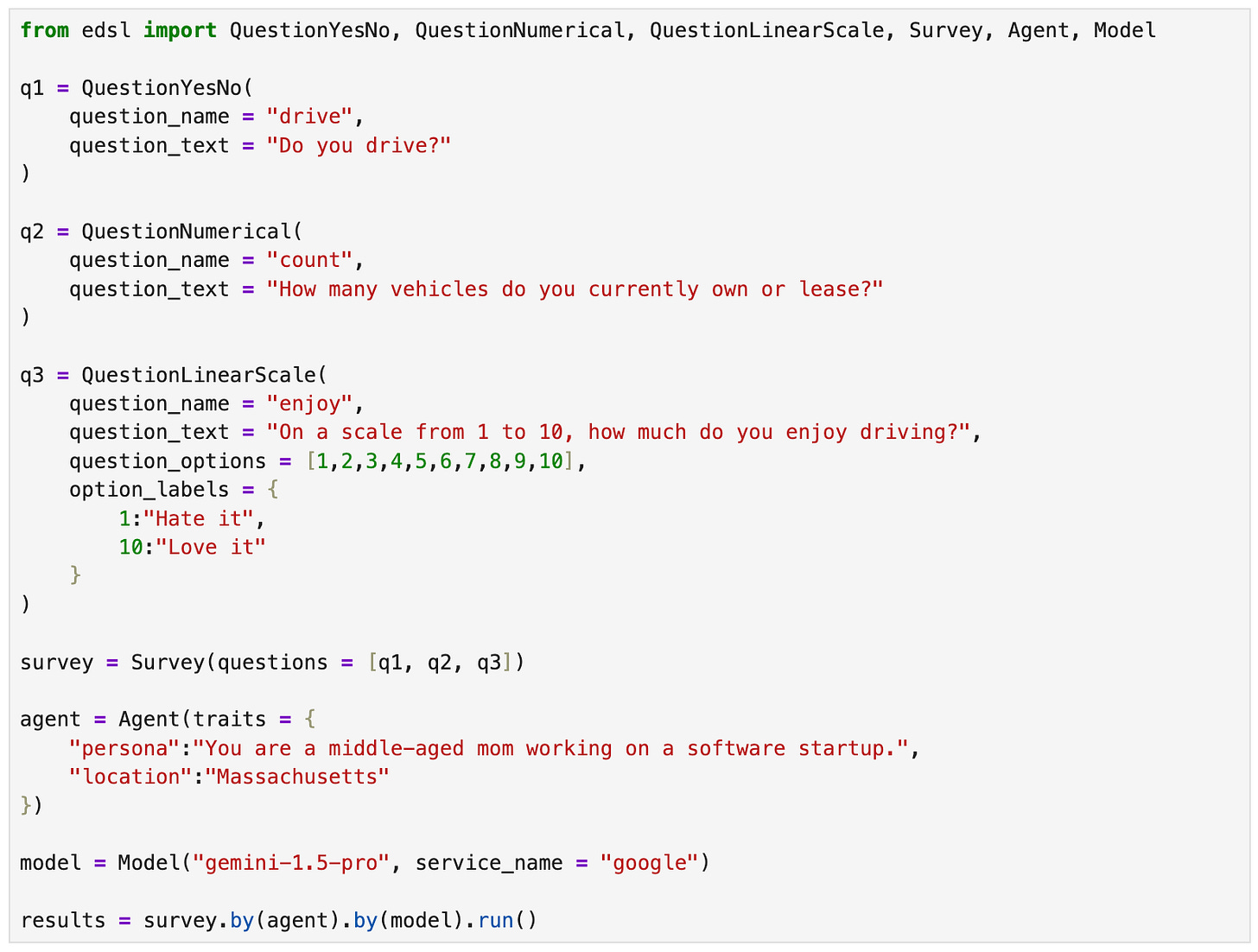

1. Create & run a survey in EDSL

Start by constructing questions in EDSL, our open-source package for running surveys and experiments with AI agents and LLMs. Choose from many common question types based on the form of the response that you want to get back from a model. You can optionally design personas for AI agents to answer the questions, and specify which of many popular LLMs you want to use to generate the responses. Run the survey by adding the agents and models to the survey and calling the run() method. This generates a formatted dataset of results that you can analyze with built-in methods:

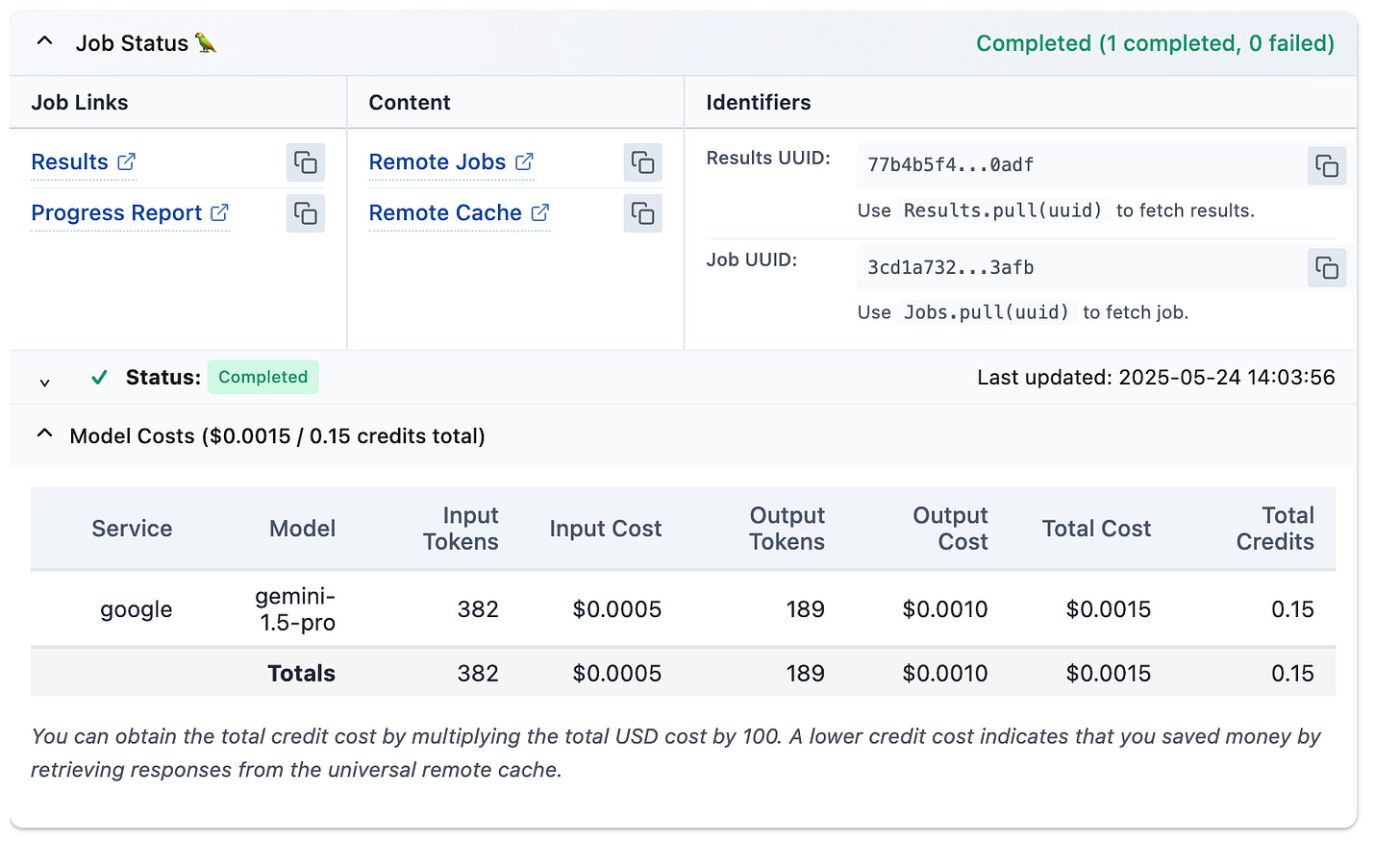
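In text form, the steps described above might look like the following minimal sketch. The question names, question text, persona and model name are placeholders, not the ones used in the notebook. The EDSL import sits inside the function so that nothing runs until you call it.

```python
def run_llm_survey():
    """Build a two-question survey and run it with one AI agent and one model.

    Sketch only: the question names, text, persona and model are
    illustrative placeholders, not taken from the original notebook.
    """
    # Imported lazily so that defining this function does not require EDSL.
    from edsl import Agent, Model, QuestionFreeText, QuestionMultipleChoice, Survey

    q_mood = QuestionMultipleChoice(
        question_name="mood",  # hypothetical name
        question_text="How are you feeling today?",
        question_options=["Great", "OK", "Not so good"],
    )
    q_why = QuestionFreeText(
        question_name="why",  # hypothetical name
        question_text="Briefly, why do you feel that way?",
    )
    survey = Survey(questions=[q_mood, q_why])

    # Optional persona for the AI agent, and the model that will answer.
    agent = Agent(traits={"persona": "A graduate student during exam week"})
    model = Model("gpt-4o")  # placeholder; use any model available to you

    # Add the agent and model to the survey, then run it.
    return survey.by(agent).by(model).run()
```

Calling `run_llm_survey()` requires `pip install edsl` plus either an Expected Parrot key or your own model provider keys, and it sends real requests to the model.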

When you run your survey at Expected Parrot, your results are automatically stored in your Coop account, where you can access and share them. You can check a progress report while the survey is running and see the cost of each response once it is done:

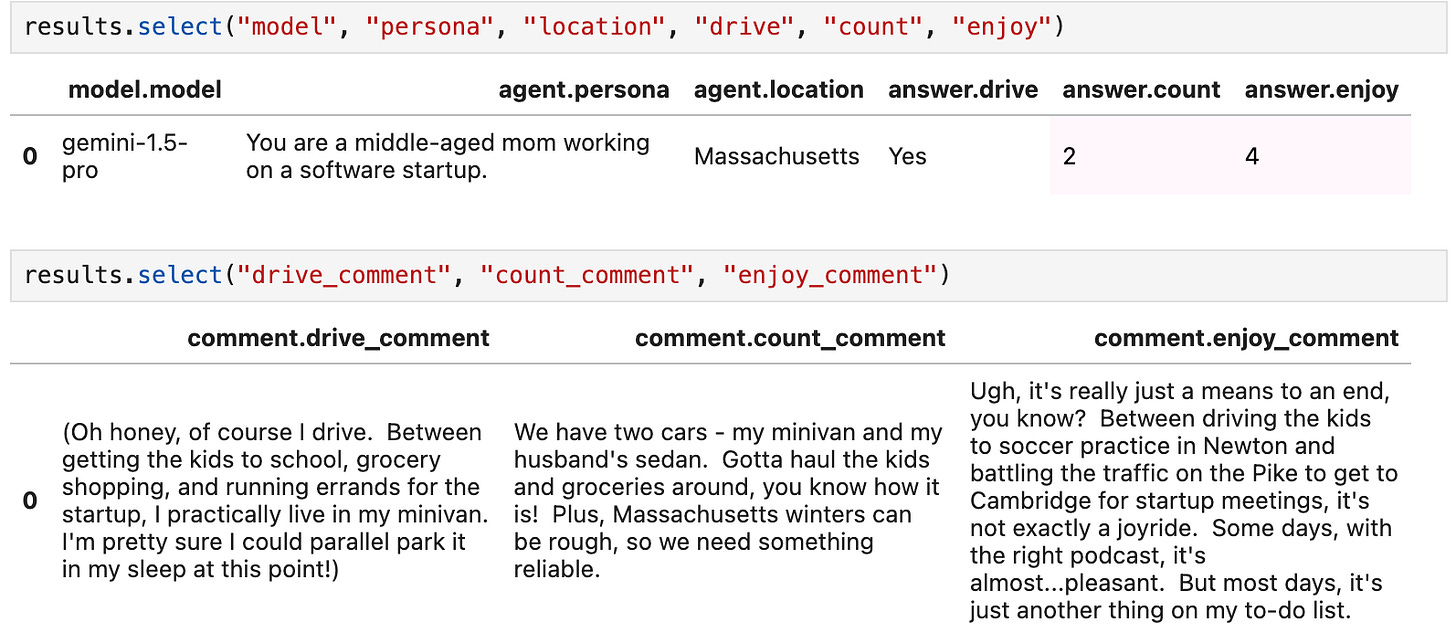

2. Inspect results

Results include information about each component of the survey job: questions, prompts, agents, models, raw responses, costs, etc. You can inspect results at your Coop account, and also use methods for analyzing them at your workspace. Here we select the responses and the comments that are automatically added to them (learn more about modifying user and system prompts for your specific research needs):

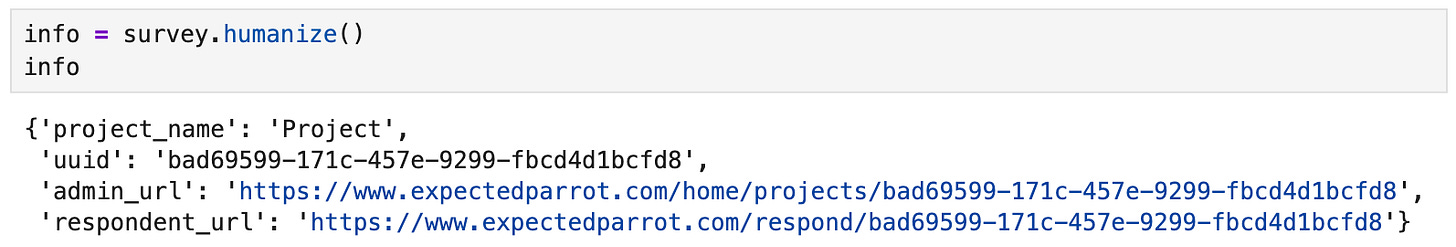
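As a rough text equivalent of that selection, the helper below picks out only the answer and comment columns. It assumes the `answer.*` and `comment.*` wildcard selectors behave as I understand EDSL's column naming to work. The helper name itself is hypothetical.

```python
def answers_and_comments(results):
    """Return only the answer and comment columns of an EDSL results object.

    Assumes `results` has a `select()` method that accepts wildcard
    column names such as "answer.*" (an assumption about the EDSL API).
    """
    return results.select("answer.*", "comment.*")
```

You would pass it the object returned by `run()` on your survey. Printing or displaying the returned value in a notebook should show one row per response.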

3. Generate a web-based version of the survey

To collect actual human responses to your survey, call the humanize() method on the Survey object to generate a web-based version of it. You’ll get a link that can be shared with anyone you want to answer the survey, and another link for accessing responses at your Coop account (the admin_url):

You can also use our interactive survey builder tool to construct a different web-based survey, or to edit the one you generated from your EDSL survey (e.g., if you want to add some screener questions for your real respondents).

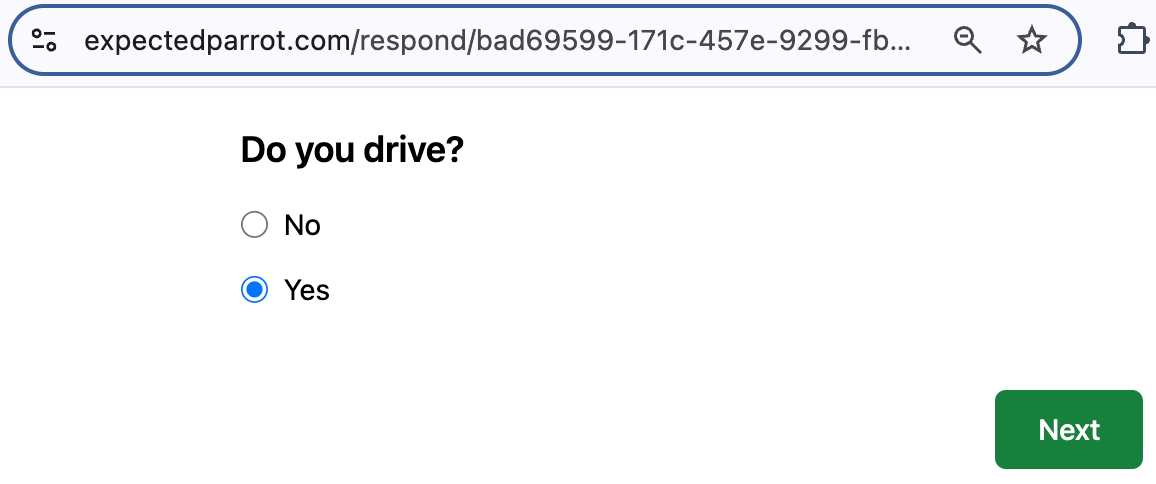

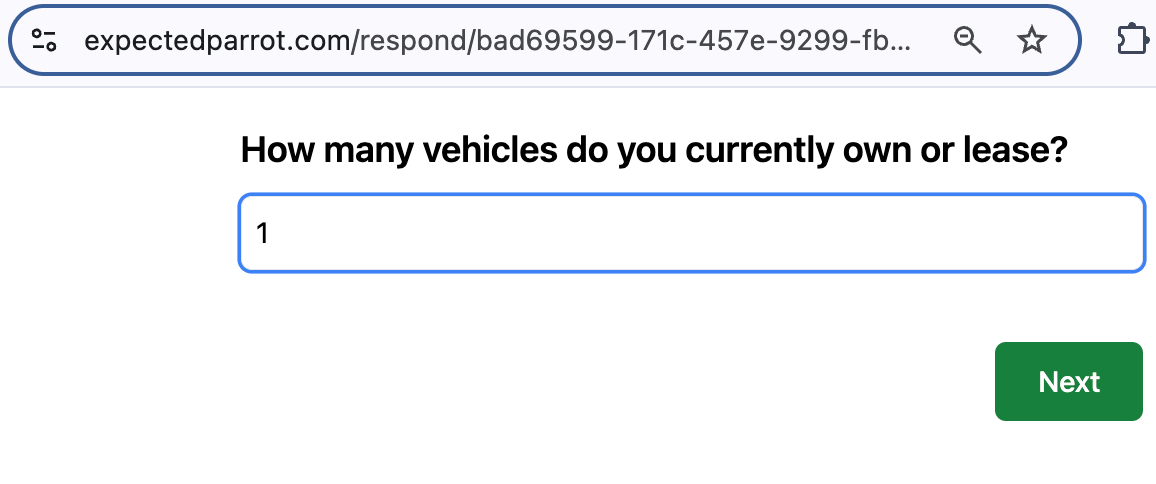

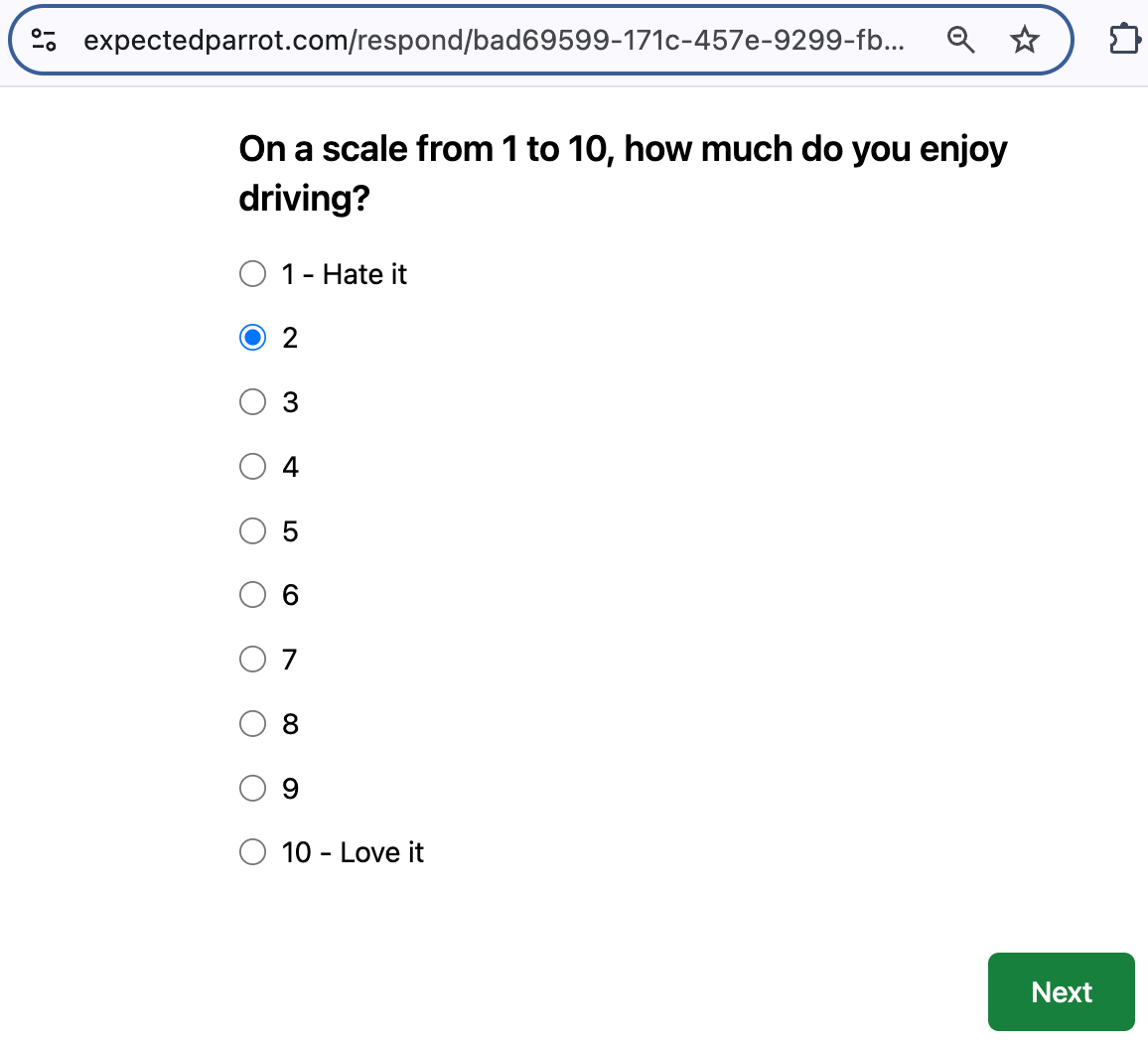
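A small sketch of the `humanize()` step follows. It assumes the method returns a dictionary-like object. The `admin_url` key is named in the text above, while `respondent_url` is my assumption for the key holding the shareable link, so it is read with `.get()` in case the name differs.

```python
def publish_survey(survey):
    """Create a web-based version of `survey` and return its two links.

    Assumes `survey.humanize()` returns a dict-like object. "admin_url"
    comes from the article; "respondent_url" is an assumed key name.
    """
    info = survey.humanize()
    return {
        "share_with_respondents": info.get("respondent_url"),
        "view_responses": info["admin_url"],
    }
```

Calling `publish_survey(survey)` on an EDSL `Survey` contacts Coop, so it needs a logged-in Expected Parrot account.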

4. View & share the survey URL

Here I answer the survey myself, so that my response can serve as a check on the AI agent’s response above:


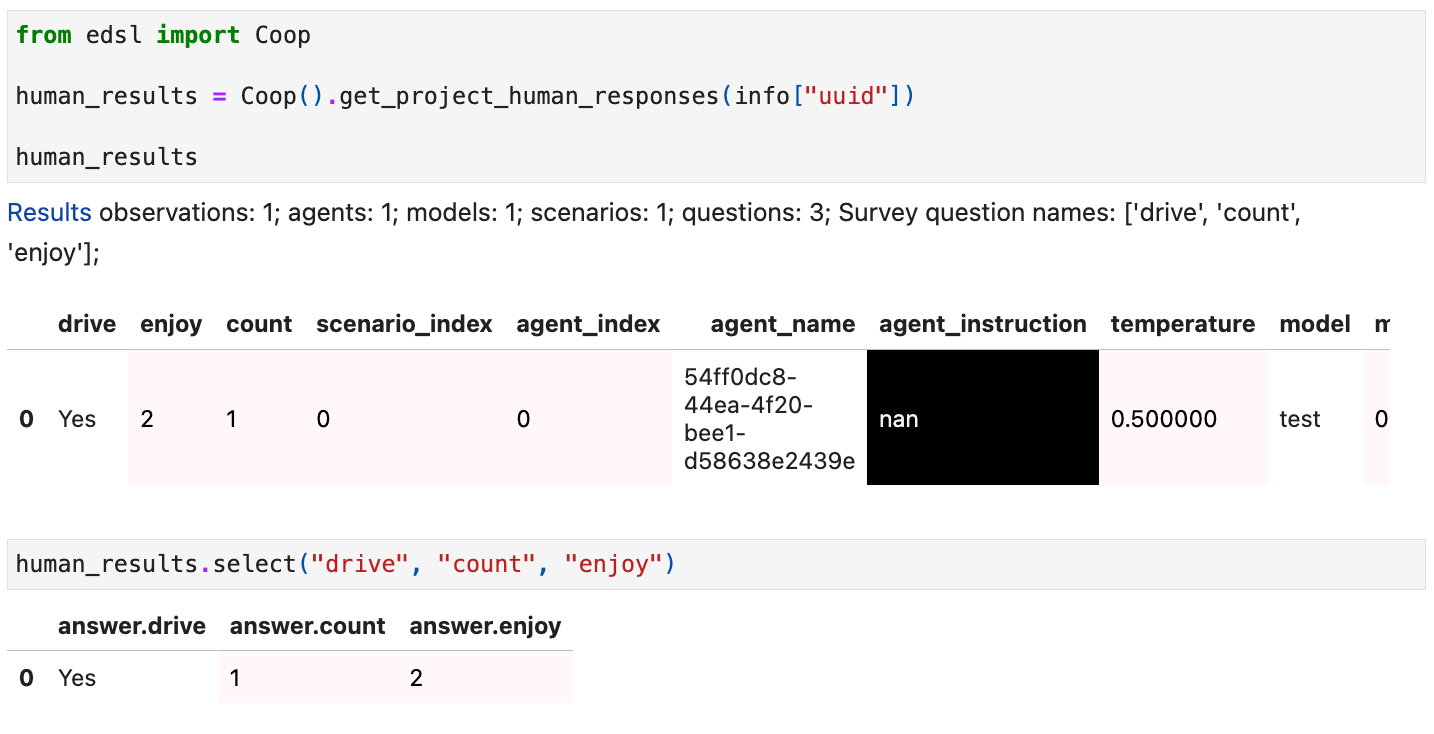

5. Combine & analyze results

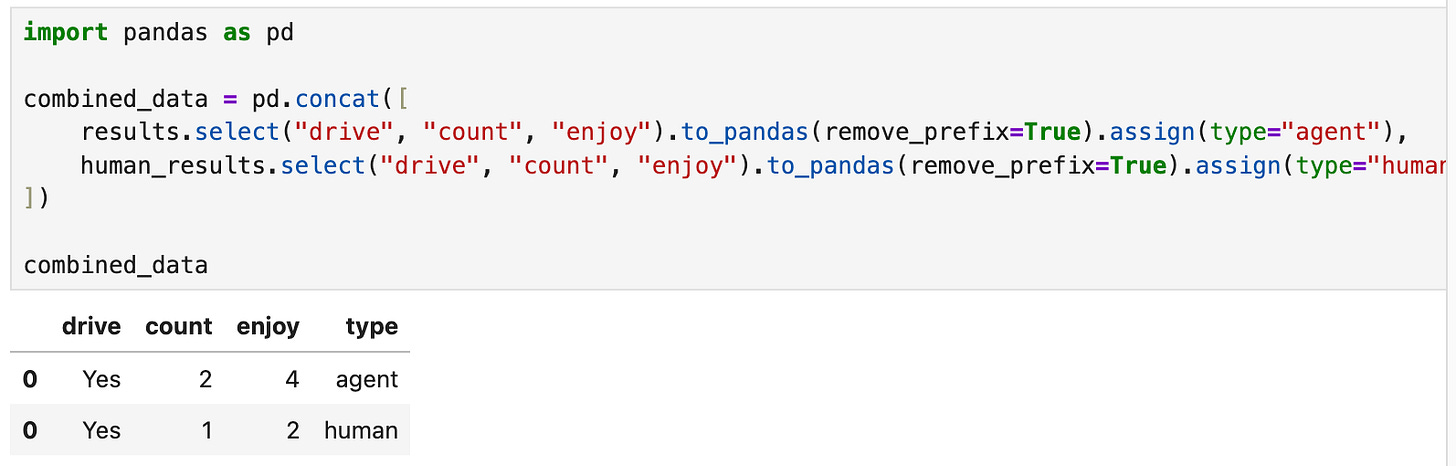

LLM and human results are datasets that you can analyze with built-in methods for working with results (e.g., to use them as inputs for follow-on questions for LLMs). You can also export them, e.g., as dataframes.
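Once both datasets are exported, a side-by-side comparison can be done with plain Python. The sketch below assumes each dataset has been turned into a list of row dictionaries, for example by calling `to_pandas()` on the results and then pandas' `to_dict("records")` on the dataframe. The column name `answer.mood` and the rows are made up for illustration.

```python
from collections import Counter


def tally_by_source(llm_rows, human_rows, column):
    """Count how often each answer in `column` appears, per respondent type."""
    return {
        "llm": Counter(row[column] for row in llm_rows if column in row),
        "human": Counter(row[column] for row in human_rows if column in row),
    }


# Made-up rows for illustration only; real rows would come from your exports.
llm_rows = [{"answer.mood": "OK"}, {"answer.mood": "Great"}]
human_rows = [{"answer.mood": "OK"}]

counts = tally_by_source(llm_rows, human_rows, "answer.mood")
print(counts["llm"]["OK"], counts["human"]["OK"])  # prints: 1 1
```

Keeping the two sources in separate counters makes it easy to see where human and LLM answer distributions diverge before doing any further analysis.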

We’re always adding new methods like these, so please send us your feature requests! Send us an email ([email protected]), post a message at our Discord, or DM us on X.