I want to see how OpenAI's o3 compares to...

EDSL is an open-source tool that lets you readily compare performance for many language models at once.

Several key features of EDSL make it a convenient tool for comparing the performance of language models:

Unified access: Connect to multiple language model providers with a single API key and universal methods—no software engineering required

Structured data collection: Automatically formatted datasets of responses eliminate manual data cleaning work

Analysis tools: Built-in methods for analyzing, reproducing and exporting results

How it works

EDSL is an open-source Python package designed to simplify conducting experiments with AI agents and language models. A typical workflow consists of the following steps:

Create questions (free text, multiple choice, numerical, matrix, etc.)

Combine questions in surveys with custom logic (skip patterns, stop rules, etc.)

(Optional) Create personas for AI agents to answer the survey

Select language models to generate the responses

Analyze the results as formatted datasets

Simplified access to models

EDSL is designed for researchers who want to work with language models without spending much time on software development. Instead of writing one-off code for each service provider, you connect to all of them in the same way.

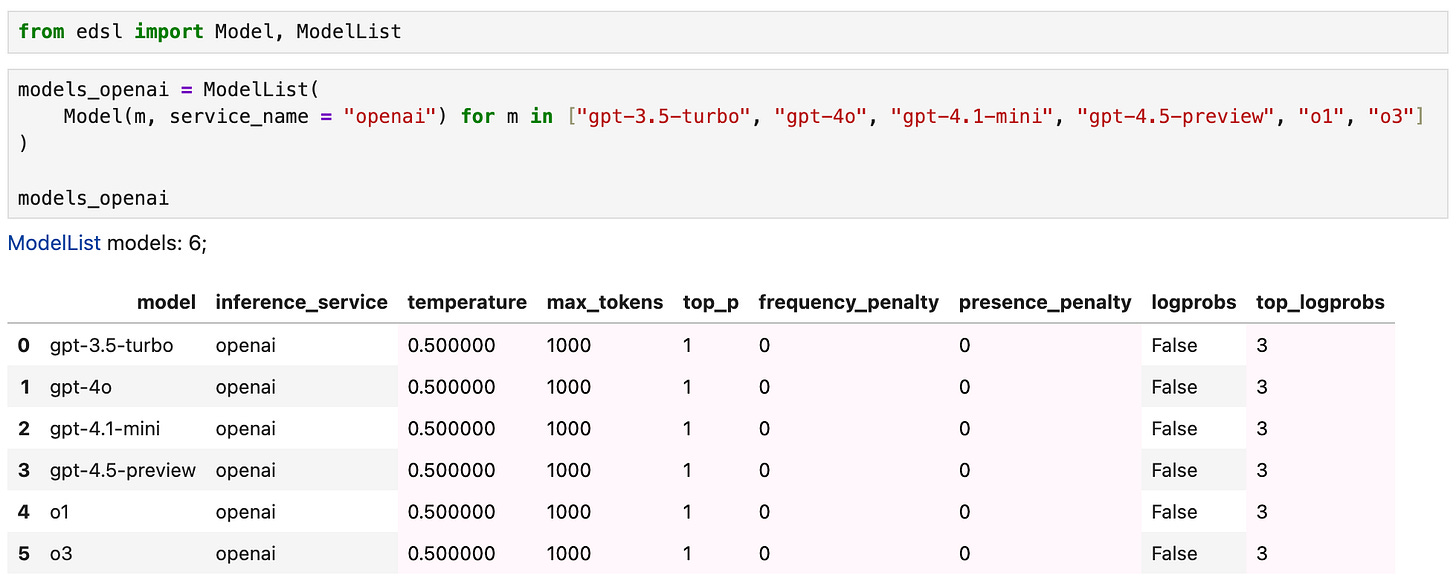

In EDSL, you can access models individually or collectively by simply selecting them and specifying desired parameters. For example, here we create a list of OpenAI models in order to use them all simultaneously with a survey when we run it. We can inspect the default parameters for the models that will be used:

You can also create multiple instances of the same model with different parameters. Here we create a new model list where we adjust the temperature of a single model:
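A minimal sketch of both model lists, assuming EDSL is installed and that `Model` accepts parameters such as `temperature` as keyword arguments. The model names and temperature values are illustrative, and the helper names `temperature_variants` and `to_model_list` are hypothetical, not part of EDSL:

```python
# Model names are illustrative; use whichever models your account can access.
OPENAI_MODEL_NAMES = ["gpt-4o", "o3"]


def temperature_variants(model_name, temperatures):
    """Return one (name, parameters) spec per temperature for the same model."""
    return [(model_name, {"temperature": t}) for t in temperatures]


def to_model_list(specs):
    """Build an EDSL ModelList from (name, parameters) specs."""
    # Imported here so the helper above also works without EDSL installed.
    # Assumption: Model takes extra parameters as keyword arguments.
    from edsl import Model, ModelList

    return ModelList([Model(name, **params) for name, params in specs])
```

Calling `to_model_list([(name, {}) for name in OPENAI_MODEL_NAMES])` would give one instance of each model with its default parameters, while `to_model_list(temperature_variants("gpt-4o", [0.0, 0.5, 1.0]))` would give three instances of one model that differ only in temperature. Printing either list is one way to inspect the parameters that will be used.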

Results as formatted datasets

Running a survey with multiple models at once produces a single dataset of results in which the models' responses can be compared side by side. Here we run a simple survey (a linear scale question and a numerical question; see examples of all question types) and inspect the models’ responses:
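A survey of this shape could be sketched as follows. The question names, question texts and model names are made up for illustration, and the column names passed to `select` assume EDSL's `model.*` and `answer.*` naming. Running it requires EDSL and valid API access:

```python
# Illustrative model names; swap in the models you want to compare.
MODEL_NAMES = ["gpt-4o", "o3"]


def build_survey():
    """Create a two-question survey: one linear scale, one numerical."""
    from edsl import QuestionLinearScale, QuestionNumerical, Survey

    q_scale = QuestionLinearScale(
        question_name="enjoy_math",
        question_text="On a scale from 1 to 5, how much do you enjoy solving math problems?",
        question_options=[1, 2, 3, 4, 5],
        option_labels={1: "Not at all", 5: "Very much"},
    )
    q_number = QuestionNumerical(
        question_name="primes_under_50",
        question_text="How many prime numbers are less than 50?",
    )
    return Survey(questions=[q_scale, q_number])


def run_comparison(model_names):
    """Run the survey with every listed model and return model/answer columns."""
    from edsl import Model, ModelList

    models = ModelList([Model(name) for name in model_names])
    results = build_survey().by(models).run()
    # One row per model; answer.* selects every question's answer column.
    return results.select("model.model", "answer.*")
```

`run_comparison(MODEL_NAMES)` would then return one row per model with its answers to both questions.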

These results can be viewed at Coop as well:

Other columns of the results include:

User and system prompts

Question details

Agent traits and instructions

Model temperature and other settings

Raw and formatted responses

Log probs

Input and output tokens

Costs
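To get an overview of the available columns, one option is to group the column names by their prefix. The sketch below is plain Python. It assumes that column names follow a `prefix.field` pattern such as `answer.enjoy_math` or `model.temperature`, and that the results object exposes them as a list of strings (for example through a `columns` attribute). `group_columns` is a hypothetical helper, not an EDSL method:

```python
from collections import defaultdict


def group_columns(columns):
    """Group 'prefix.field' column names into {prefix: [field, ...]}."""
    groups = defaultdict(list)
    for column in columns:
        # Split on the first dot only, so fields may contain dots themselves.
        prefix, _, field = column.partition(".")
        groups[prefix].append(field)
    return dict(groups)
```

With a results object in hand, `group_columns(results.columns)` would list the fields under each prefix, which makes it easier to choose what to select or export.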

Works with many models

EDSL works with many other popular language models as well. You can check available models, current token prices and daily performance on test questions here:

Please get in touch to request other service providers that you want to use!

Getting started

Our documentation page includes tutorials and demo notebooks for getting started using EDSL with language models, including examples of methods for evaluating model responses.

If you have a use case you don’t see, please send us a message and we’ll create a notebook for you!